Tech leaders debated how to bake responsibility into AI not just as a compliance afterthought but as a fundamental competitive edge.

Artificial intelligence is breathing new life into San Francisco, drawing comparisons to the dot-com days of Silicon Valley. Investment is pouring into startups, founders are scrambling like it’s 1999, and beneath it all, AI is transforming how people live and work. Yet the speed of change has raised urgent questions: How do we embed values into AI? Who ensures responsibility is not sacrificed for commercial gain?

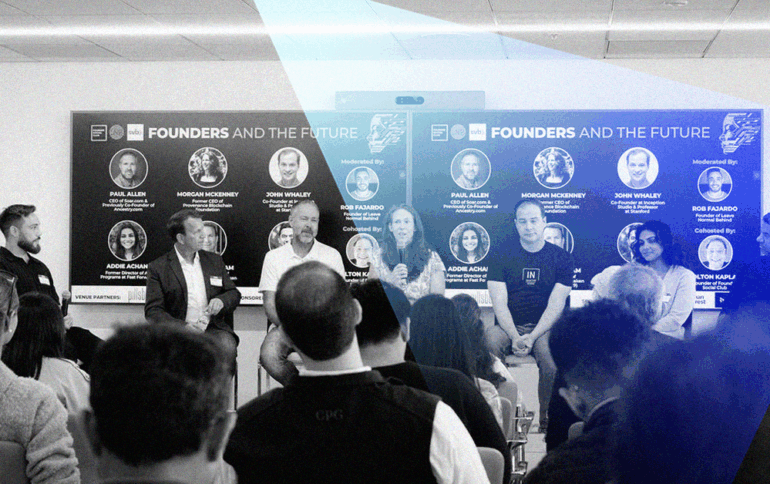

This is the tension that took center stage at Founders and the Future, a recent event hosted by Founder Social Club and Leave Normal Behind. Against the backdrop of the Bay Bridge and Coit Tower, founders, investors, and academics debated how to balance innovation with responsibility.

Responsible Innovation as a Practice, Not a Goal

The panel agreed that “responsible innovation” cannot be treated as a fixed outcome. Instead, it must be a continuous methodology that startups apply from the earliest stages of development. Rather than relying on abstract guidelines, responsibility should be built into workflows, mindsets, and decision-making. This often means involving compliance and risk experts early in product design, which may slow development initially but builds a stronger foundation of trust in the long term.

Morgan McKenney, former CEO of Provenance Blockchain Foundation, made the same point, saying that a mindset of “creative abrasion,” early collaboration, and diversity of thinking are critical.

“You must bring in your compliance and your design partners early in the process…design with people who see the risks, who see the consumer, and the blue sky thinker,” she said.

Without trust, panelists argued, the pace of innovation will not be sustainable.

Data Ownership as a Human Right

Data rights emerged as one of the most pressing concerns. Much of today’s AI depends on vast datasets, yet individuals rarely control how their data is collected or used. Paul Allen, CEO of Soar.com and co-founder of Ancestry.com, reminded the room: “You are a human being. Your data belongs to you, the human being. It shouldn’t be controlled by the government. It shouldn’t be controlled by corporations.”

Several states, including Colorado, are considering legislation to increase consumers’ ownership of their personal data. This movement reframes data ownership as a human rights issue. The debate underscored the stakes: If AI systems require intimate personal data to function, individuals must have agency over their “digital DNA,” rather than ceding control to corporations or governments. Yet the present-day reality, in which companies and governments own and regulate consumer data, prompted considerable discussion and concern among the panelists. Until ownership shifts, AI will continue to be built on foundations outside users’ control.

Building Trusted AI

Trust surfaced again in the discussion of AI adoption. Surveys, including a 2025 KPMG report, show that while a majority of people use AI, fewer than half say they trust it. Bridging this gap requires building AI that is not only powerful but also transparent, fair, and accountable. This means including communities most affected by technology in the design process, adopting clear principles for how models are trained, and educating users on how AI interacts with their data. Responsible AI, the panel concluded, must be human-centered: designed to empower people rather than erode their autonomy.

Incentives and the Race Ahead

The conversation repeatedly turned to the systemic forces shaping AI. Competitive pressure, particularly with China’s rapid advances, makes slowing down unrealistic. With trillions of dollars flowing into AI development, panelists acknowledged that responsibility will often be the first thing sacrificed in the push to scale. The challenge, then, is to reframe responsibility as a competitive advantage rather than compliance overhead.

Gaurab Bansal, Executive Director of Responsible Innovation Labs, argued that just as manufacturing once shifted to compete on quality, the AI industry must learn to compete on responsibility. That cultural and behavioral change, while difficult, will determine whether AI evolves in ways that truly benefit society.

Designing the Future Now

The paradox of this moment is that we’re witnessing extraordinary opportunity paired with extraordinary risk. AI promises breakthroughs in productivity, medicine, and human potential. However, without thoughtful governance, incentives, and design, it also threatens to deepen inequality, entrench bias, and strip individuals of agency.

The question is no longer whether AI will shape the future, but how. As John Waley, co-founder at Inception Studio and professor at Stanford University, said: “There’s no stopping this runaway train. Given this is happening, how do we do this in a responsible way?”

Responsibility cannot be bolted on after the fact. It must be embedded from the start: in how companies structure their teams, in how governments legislate, and in how individuals insist on owning their data and their choices.

Moderator Rob Fajardo, founder of Leave Normal Behind, reminded the room: “We’re designing the future in the present moment.”