Across traditional professions, a wave of AI optimism is beginning to take hold; in healthcare, AI is already proving instrumental in easing clinician burnout and reducing admin overhead, while in finance, it’s unlocking strategic insight at scale. Even education is adapting fast: As reported by The Guardian, Ohio State University will now require all graduates to be “fluent in AI,” a clear signal that institutions are retooling for a future where AI literacy is no longer optional.

But until we redesign the structures we work within, and think carefully about the role of humanity in an AI-first world, AI adoption will continue to look uneven, even paradoxical.

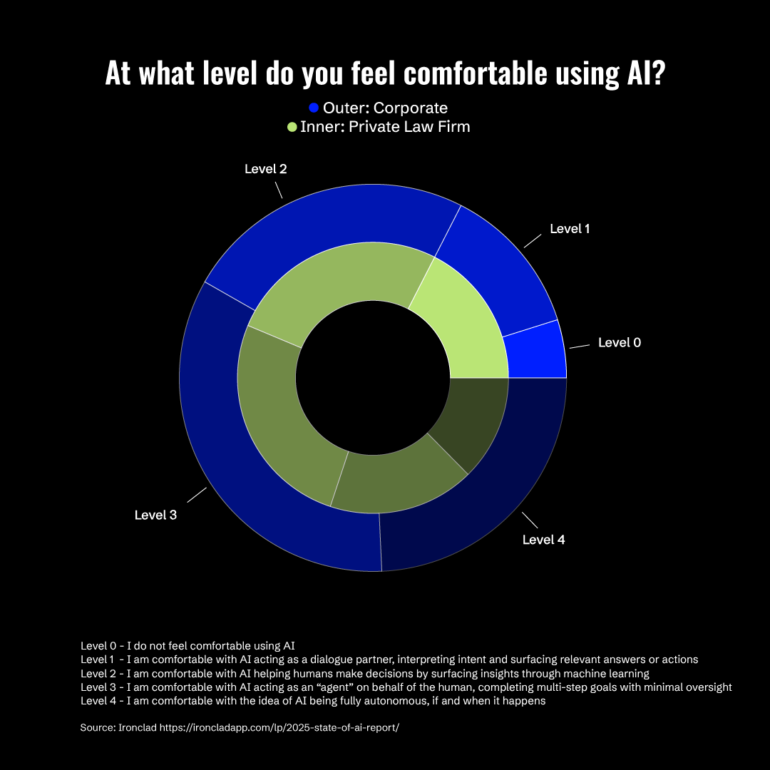

Exhibit A: A curious split in the legal world this week reveals where AI is gaining real traction and who is ready to operationalize it.

In Ironclad’s 2025 State of AI report, lawyers show a striking level of comfort with AI, with 1 in 5 being completely fine with the idea of fully autonomous systems making decisions without human intervention. More interestingly, corporate lawyers were twice as likely as lawyers at private law firms (34% to 17%, at Level 3 above) to be comfortable with AI acting as an “agent” on behalf of humans.

At first glance, this might seem like a niche professional difference. But looking closer, it reflects a broader truth about how trust in AI maps to structure and incentives.

Inside corporations, legal teams are under pressure to move fast, cut costs, and do more with less. AI helps them hit those goals. At law firms, where billing by the hour is still king, there’s less upside in speeding up the work. So AI adoption slows. Not because lawyers fear AI but because the business model isn’t built for it.

The kicker here is that the legal profession, often painted as conservative and tech-averse, is also showing signs of optimism. The same report showed 76% of respondents agreed AI is helping reduce burnout, and 64% said it helps them communicate better with stakeholders, which alone accounts for ~6.2 hours/week in this profession. The jury is still out on AI’s impact on job security, with half believing it will create more job opportunities.

What does this tell us?

The discourse surrounding trust in AI is about more than whether or not to trust it. It’s also about why it is or isn’t trusted. Where AI aligns with incentives (i.e., it complements workers rather than threatening their value), it’s embraced. Where it undermines the economic engine, it’s viewed with caution.

Looking beyond law, you may find similar divides between startups and legacy enterprises, in-house teams and consultants, operational roles and billable ones.

The tech is ready. But the systems and world we’ve built around it? Still in dev.

The Editors